Gary King is director of Harvard University’s Institute for Quantitative Social Science, which works to create tools and foster collaboration across the university and beyond. The Albert J. Weatherhead III University Professor, King is a specialist in data, statistics and the R statistical programming language, and he has written more than 130 journal articles, 20 open-source software packages and many books.

King, a political scientist, is also a pioneering classroom instructor. Teachers looking to bring new insights into the classroom may want to see his 2012 paper “How Social Science Research Can Improve Teaching.” He is also very active on Twitter (@kinggary), where he posts on a variety of data, news media and academic topics.

As part of our “research chat” interview series, Journalist’s Resource spoke with King about the Dataverse Network, an open-source application to publish, share, reference, extract and analyze research data. As data journalism continues to expand as a field, a variety of possible connections have arisen between the work of social scientists and that of journalists. More broadly, the rise of computational science has implications for everything from elections to e-commerce.

The following is an edited interview conducted by Journalist’s Resource researcher Alex Remington:

_____

Journalist’s Resource: What was the idea behind Dataverse?

Gary King: Dataverse is a project to preserve and make available large amounts of data to lots of people.

Before Dataverse, you had two choices. You could send your data to an archive, like the ICPSR or the Roper Center for Public Opinion Research. They’d document it, put the data in a preservation format, make backups and do all the things professional archivists do and individual scholars don’t. If some other scholar wanted your data, they’d get it from the archive, but what they’d often do is thank the archive. You might have spent three years crawling through bug-infested whatever, and it would have been very hard to get the data, and the scholars are thanking the archive. The archive deserves thanks, but you deserve thanks too. So the credit system was not really working.

Alternatively, if you wanted to make your data available but also wanted academic credit or Web visibility, you’d put it on your own website. But data on people’s websites typically lasts only a few years. Either you’ll move, or the website will go down, or the backup is basically the computer underneath your desk and there’s a flood or whatever. And even if the file is still there and you can access it, after a few years it’s in a format no one can read.

You basically had to choose between getting credit or making your data permanently available. What we did is we broke that choice. We solved a political problem technologically — and that’s what Dataverse does.

JR: If someone wants to put their data on Dataverse, how do they do that?

Gary King: All scholars have pretty much the same structure of a website: lists of classes, publications, students, etc. We suggest that scholars add another page to their website, a list of datasets: your Dataverse. You’ll spend a few minutes giving us branding information for your website, you’ll put your data on the Dataverse Network site, and it will serve up a page for you. Then when someone comes to your website, they’ll be able to click on an extra page and look at your Dataverse, which will list your datasets. The URL will be your site’s, but the data is permanently archived and served up by the Dataverse Network. So you get permanent archiving and you get the credit. There are tremendous advantages to both, and you don’t have to choose.

The Dataverse Network at IQSS is now, I think, the largest collection of social-science data in the world. When people search for data, they’ll be able to find yours more easily. They’ll then get to your Dataverse, which is branded exactly like the rest of your website. We also developed a citation standard that makes it easy to cite your data in addition to the article or book that used the data. And at the same time, you won’t have to worry about offsite backups and disaster recovery. That’s the basic idea of the Dataverse Network.

In addition, every time a new feature is added to Dataverse, you get it too. At first your website just has a list of datasets, but ultimately you’ll see there are tons of other services available on your website. People will be able to run online statistical analyses. They’ll be able to take subsets of the data and cite those subsets, so that someone else can get back to exactly the same data. They’ll be able to run an analysis online, download a script and rerun the script later. Dataverse is also connected to R, so every time a new method is added you can run it straight from Dataverse. So Dataverse is a big open-source package that a lot of people now contribute to.

JR: How does Dataverse address search?

Gary King: We can search the metadata that describes the data and the documentation that accompanies it. We can’t automatically merge diverse datasets. That’s not even theoretically possible. But we try to make things as visible as possible; that’s the main thing.

JR: What would be a good way to make Dataverse available to journalists?

Gary King: A curated list of datasets would be useful, even if you were the one wanting to write a Pulitzer Prize-winning story about something completely different — you still want the lay of the land, you still want to know what has been done before. For example, wouldn’t it be great to have a curated Dataverse that included datasets that had been used in newspaper articles? So somebody could come, without having to look through all the previous issues of the New York Times, and look through what data journalism looks like in America, or in the world. That would be incredibly useful.

JR: What sort of tools would Journalist’s Resource readers be interested in?

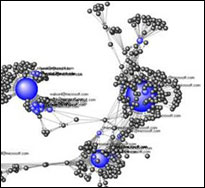

Gary King: There are all kinds of tools to analyze quantitative data. This is what the media call “Big Data” these days and what we’ve called quantitative social science for many years. People are familiar with analyses of traditional quantitative datasets, for example surveys, where each row is a person and each column is an attribute of the person or an answer to a survey question. But there are new things we didn’t think of as datasets that now can be analyzed. Text is one that I’ve worked on a lot.

If you have all the WikiLeaks documents and want to figure out what’s in them, no human could read them all. And even if you had enough people each read a little piece, you’d still have to find some way to put all of those impressions together, so that alone wouldn’t help. We need something to analyze the text.

JR: I saw you have been analyzing blogs from China and what language is permissible and impermissible. I also saw a lot of stories after the last presidential debates that used word-frequency analysis.

Gary King: [Word-frequency analysis] will work sometimes and be a complete disaster other times. Yet we do it anyway. There are much better methods now.

JR: Are there some methods that will be more useful to a journalist than to a social scientist?

Gary King: I think a good social scientist and a good journalist ought to be able to communicate with each other.

JR: In other words, methods should be of equal utility to both?

Gary King: They’re not going to be, because some are going to be too technical — not that the journalist couldn’t figure it out, but that’s not what they’re focusing on. Ultimately, if the social scientist really understands the method and it’s really good, there’s no reason in the world why one group should use it and the other shouldn’t.

JR: Are there any particular methods that you feel ought to be used more often?

Gary King: I think text analytic methods are tremendously valuable. They make large quantities of unstructured information actionable. The idea that we can go on Twitter or look at blogs and read a few of them and think we understand what’s going on that day is delusional. The only conceivable way of doing it is with some type of automated text analysis. The simplest one, just counting words, gives you some sense sometimes and sometimes it’ll be completely wrong. There are more sophisticated methods that won’t make the obvious mistakes.

JR: What should data journalists do to build good databases for the public?

Gary King: Big Data is not actually about the collection of data. It’s about the production of data for the most part from organizations and programs and operations that were designed for completely different purposes. A company takes an HR system and moves it from paper to digital, and a side effect is data on all the employees that could produce really great analytics and provide a view into what the organization’s doing. It basically makes it possible to do something, but doesn’t actually do anything by itself.

The innovation in big data is big analytics, not big data. So what could data journalists do? You could recognize a source of data that wasn’t born as research data and make it available to researchers or to citizens. Of course, you may be able to find some really terrific things in there, like the employees that are getting paid without doing anything, or all kinds of other stuff.

JR: Are there things analytically that cannot be done by just crowdsourcing the masses? Are there analyses that can only be done by credentialed professionals?

Gary King: Take the Google search engine, for example. It’s a powerful tool that can be used by pretty much anybody. The reason why such an incredibly sophisticated tool works for totally unsophisticated people is that they can verify whether the thing that they’re doing is right. If you’re looking for a particular newspaper site or a fact, when you get the fact back, you can figure out whether that was the fact you were looking for. If you were asking something completely different, however — whether unemployment’s going to go up next month, for example — unsophisticated people are not going to be able to query a database, do an analysis, and know whether they’re right.

If you were trying to predict what the unemployment rate was going to be next month (the following is an actual story), you might count the number of times people used relevant words like “jobs,” and it might work. Then, as actually happened, references to the word “jobs” increased and increased, and some people started betting that unemployment was going up. Of course, they didn’t realize that Steve Jobs had died, so the counts were picking up irrelevant mentions. Since they had no way of validating the numbers, they just got the wrong answer and probably lost a lot of money.
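To make the failure concrete, here is a minimal Python sketch of naive keyword counting. The posts are invented for illustration, and the function names (`count_keyword`, `count_keyword_excluding`) are hypothetical. Nothing here reproduces the forecasters in King’s story. The naive count roughly doubles on “day 2” purely because of posts about Steve Jobs. Excluding the confounding phrase fixes this one case, but only because we already knew which confound to look for.

```python
import re

def count_keyword(posts, keyword):
    """Naive approach: count every post that mentions the keyword at all."""
    pattern = re.compile(rf"\b{re.escape(keyword)}\b", re.IGNORECASE)
    return sum(1 for post in posts if pattern.search(post))

def count_keyword_excluding(posts, keyword, exclude_phrase):
    """Same count, but ignore mentions that occur only inside a known
    confounding phrase (here, a proper name)."""
    keyword_re = re.compile(rf"\b{re.escape(keyword)}\b", re.IGNORECASE)
    exclude_re = re.compile(re.escape(exclude_phrase), re.IGNORECASE)
    count = 0
    for post in posts:
        # Strip the confounding phrase first, then look for the keyword again.
        cleaned = exclude_re.sub("", post)
        if keyword_re.search(cleaned):
            count += 1
    return count

# Hypothetical posts from two days; day 2 is dominated by news about Steve Jobs.
day1 = [
    "Looking for jobs in my area, nothing yet",
    "Great weather today",
    "Lost my job last week, applying for new jobs",
]
day2 = [
    "RIP Steve Jobs, a true visionary",
    "Steve Jobs changed how we use computers",
    "Remembering Steve Jobs tonight",
    "Still hunting for jobs after layoffs",
]

for label, posts in [("day 1", day1), ("day 2", day2)]:
    naive = count_keyword(posts, "jobs")
    adjusted = count_keyword_excluding(posts, "jobs", "Steve Jobs")
    print(label, naive, adjusted)
# prints:
# day 1 2 2
# day 2 4 1
```

The naive series says job talk doubled. The adjusted series says it fell. A hand-written exclusion rule like this does not generalize, which is why some independent way of checking the numbers is still needed.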

So if you can make yourself vulnerable to being proven wrong, then you can use the method. Sometimes it’s easy and automatic to figure out whether you’re right or wrong, like the Google search engine. And sometimes you have to actually design procedures to figure out whether you’re right or wrong. And that’s what sophisticated scientists do when they use data. Journalists can do that too.
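In text analysis, one common way to “design procedures to figure out whether you’re right or wrong” is to have people hand-code a random sample of the items a rule flagged. You then estimate what share are actually relevant and attach an uncertainty interval to that estimate. The Python sketch below is an illustration of that general idea, not a method described in the interview. `estimate_precision` and `hand_label` are hypothetical names; `hand_label` stands in for a human coder. The usage example simulates it with a simple rule purely so the code runs. The interval is the standard Wilson score interval for a proportion.

```python
import math
import random

def wilson_interval(successes, n, z=1.96):
    """Wilson score interval for a binomial proportion (z=1.96 gives ~95%)."""
    if n == 0:
        raise ValueError("need at least one labeled item")
    p_hat = successes / n
    denom = 1 + z**2 / n
    center = (p_hat + z**2 / (2 * n)) / denom
    half_width = (z / denom) * math.sqrt(p_hat * (1 - p_hat) / n + z**2 / (4 * n**2))
    return center - half_width, center + half_width

def estimate_precision(flagged_posts, hand_label, sample_size, seed=0):
    """Randomly sample flagged posts, have hand_label judge each one, and return
    the share judged relevant together with a ~95% interval for that share."""
    rng = random.Random(seed)
    sample = rng.sample(flagged_posts, min(sample_size, len(flagged_posts)))
    relevant = sum(1 for post in sample if hand_label(post))
    return relevant / len(sample), wilson_interval(relevant, len(sample))

# Hypothetical set of posts flagged by a naive "jobs" keyword rule.
flagged = ["RIP Steve Jobs"] * 30 + ["Need new jobs in Ohio"] * 10

# Stand-in for a human coder: a post counts as relevant if it is not about Steve Jobs.
def simulated_coder(post):
    return "steve jobs" not in post.lower()

share, (low, high) = estimate_precision(flagged, simulated_coder, sample_size=20)
print(f"estimated share relevant: {share:.2f} (95% interval {low:.2f} to {high:.2f})")
```

The Wilson interval is used here instead of the simple normal approximation because it behaves better with small samples and with proportions near 0 or 1. A low estimated share is the warning sign the forecasters in the story never saw.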

JR: “If your mother says she loves you, check it out.”

Gary King: Yeah!

JR: There’s a notion that there’s a democratization of data on the Internet. If enough people look at something, the presumption goes, you’ll find what’s in it.

Gary King: Well, you’ll find some things. If enough people look at the billion social media posts that appear every two-point-something days, are they going to find everything that’s in them? I don’t care how many research assistants you have; how are they going to read a billion posts and summarize them in a valid way?

JR: Chris Anderson, editor of Wired magazine, wrote an article called, “The End of Theory: The Data Deluge Makes the Scientific Method Obsolete,” in which he writes, “The new availability of huge amounts of data, along with the statistical tools to crunch these numbers, offers a whole new way of understanding the world. Correlation supersedes causation, and science can advance even without coherent models, unified theories, or really any mechanistic explanation at all.”

Gary King: He’s using words in ways that are confusing, at least in that particular quote. But let me just define some terms. One division within science is theory versus empirics. There are whole bodies of work in academia where, for lack of direct empirical observation, we have to just make assumptions about people. For example, we assume that they pursue their own rational self-interest. Well, a lot of the time they do pursue their own rational self-interest, but a lot of the time they don’t. If you had to make an assumption about people, maybe that’s a good one to start with, but if you have data, you don’t have to. You can go figure out what they’re doing in particular instances.

So, the “End of Theory”? Of course it’s not the end of theory. But the balance between theory and empirics is shifting toward empirics in a big way. That’s always the case in areas where there’s a lot of data. Does that make the scientific method obsolete? No — that’s absurd. Science is about inference, using facts you have to learn about facts you don’t have. So if you have more facts, you don’t have to make as many inferences as you would otherwise.

It’s never going to be the case that there’s no inference, and by definition, it’s never going to be the case that we’re not going to need science. All the data revolution is influencing is how much empirical evidence we have to bring to bear on a subject. Nobody says in astronomy when we get a better telescope that we don’t need theories of how things work out there. We just got some more evidence, that’s great.

JR: Journalism professor Steve Doig referred to data journalism as “social science on deadline.” What can journalists analyzing data do to make sure that these analyses are understandable?

Gary King: I think ultimately there is no line between journalists and social scientists. Nor is it true that journalists are less sophisticated than social scientists. And it is not true that social scientists totally understand whatever method they should know in order to access some new dataset. What matters in the end is that whatever conclusions you draw have the appropriate uncertainty attached to them. That’s the most important thing.

The worst phrase ever invented is “That’s not an exact science.” That is a sentence that makes no sense. The whole point of science is that you’re making inferences about things that we’re not really sure of. So the only relevant thing to express is the appropriate level of uncertainty with our inferences.

Sometimes we have a shorter deadline. That’s true in journalism and in social science as well. No matter what, in the end there’s always some data we don’t have. In the end, there’s always some uncertainty about the conclusion that we’re going to draw. And the more interesting, the more innovative, the more cutting-edge the subject we’re analyzing, the more uncertainty we’re going to have. And that’s just the breaks.

And so what makes us — I would say scientists; journalists maybe don’t like to call themselves scientists, but I’m happy to — all doing the right thing is expressing the appropriate degree of uncertainty with respect to our conclusions. So I don’t see any difference between journalists and social scientists. I see the same continuum within journalism and within social science.
