When we ask a question, test a hypothesis or question a belief, we often exhibit confirmation bias. We are more likely to search for evidence that confirms rather than disconfirms the question, hypothesis or belief we are testing. We also interpret evidence in ways that confirm rather than disconfirm that question, hypothesis or belief, and we tend to perceive confirming evidence as more important than disconfirming evidence. Confirmation bias influences the questions we explore in both our professional and personal lives, whether our investigation is on behalf of an audience of millions or an audience of one.

In journalism, confirmation bias can influence a reporter’s assessment of whether a story is worth pitching and an editor’s decision to greenlight a story pitch. If the pitch is accepted, it can determine the questions the reporter decides to ask — or declines to ask — while investigating the story. It can affect an editor’s choice to assign certain stories to one reporter versus another. And it can leave journalists susceptible to deliberate misinformation campaigns.

As a behavioral scientist, my research examines the causes and consequences of cognitive biases and develops interventions to reduce them. I have found that susceptibility to cognitive biases is not an immutable property of the mind. It can be reduced with training that targets specific biases. Debiasing training interventions teach people about biases like confirmation bias. They can also give examples, feedback and practice and offer actionable strategies to reduce each bias, which can improve professional judgments and decisions, from intelligence analysis to management.

Many of the same cognitive biases apply to journalism. I believe debiasing training can help journalists recognize and reduce the influence of cognitive biases in their own judgments and decisions, and spot that influence in the actions and statements of the people they cover in their stories. Take, for example, this audio clip in which Pulitzer Prize-winning New York Times reporter Azmat Khan discusses how confirmation bias affected decisions about U.S. air strikes in the Middle East.

Confirmation bias in coverage of the Iraq War

In 2002, during the buildup to the United States’ invasion of Iraq, major newspapers including The Washington Post, The New York Times, The Los Angeles Times, The Guardian, The Daily Telegraph and The Christian Science Monitor ran a total of 80 front-page articles on weapons of mass destruction between Oct. 11 and 31 alone. Many exhibited confirmation bias, as revealed by a 2004 analysis by Susan Moeller, a professor of media and international affairs and director of the International Center for Media and the Public Agenda at the University of Maryland. In contrast to the careful distinctions these papers had drawn between terrorism and the acquisition of WMD during 1998 tensions between India and Pakistan, Moeller found that these articles broadly accepted the linkages between terrorism and WMD made by the Bush administration and its assertions that war with Iraq would prevent terrorists from gaining access to WMD. The articles tended to lead with the White House’s perspective. Alternative perspectives and disconfirming evidence tended to be placed farther down in the story or buried.

How does confirmation bias work?

Confirmation bias often takes the form of a positive test strategy. When testing a hypothesis, belief or question, there are usually four kinds of evidence to consider: evidence for and against that hypothesis, belief or question, and evidence for and against alternative hypotheses or its negative (i.e., that the hypothesis being tested is wrong). We exhibit confirmation bias when we search for and prioritize evidence that would confirm our main hypothesis and disconfirm its alternatives or negative, and when we ignore or brush off evidence that refutes our main hypothesis and confirms its alternatives or negative.

Consider a reporter investigating whether SARS-CoV-2 was manufactured and leaked from a laboratory in Wuhan, China, rather than having a zoonotic origin. The journalist might be more likely to report on or feature the physical proximity of the Wuhan Institute of Virology to the Huanan Market, where live animals were sold and most of the earliest cases were found; research on bat coronaviruses conducted at the institute; Chinese government attempts to deny that live animals were sold at the market; or the potentially erroneous first case reported 30 km from the Huanan Market. That journalist might be less likely to emphasize that most early cases were tied to the Huanan Market, that similar infections were found in raccoon dogs sold there and in nearby animal markets, that the structure of SARS-CoV-2 is not optimized for human transmission, and that SARS-CoV-2 has had a profound impact on the Chinese economy.

| Hypotheses | Confirming Evidence | Disconfirming Evidence |
| --- | --- | --- |
| Lab Leak (Focal Hypothesis) | a. Physical proximity of the Wuhan Institute of Virology and Huanan Market; research examining transmissibility of bat coronaviruses to humans at the Wuhan Institute of Virology | b. First cases not linked to workers at the Wuhan Institute of Virology; severe economic impact on China |
| Zoonotic Origin (Alternative Hypothesis) | c. Preponderance of early cases linked to workers at Huanan Market in Wuhan, China; evidence of SARSr-CoVs found in raccoon dogs sold in the Huanan Market; genotype of SARS-CoV-2 not optimized for human transmission | d. Chinese government denial that Huanan Market sold live animals; earliest case reported 30 km from Huanan Market |

We are prone to confirmation bias when intrinsic or extrinsic factors prompt us to focus on or test a particular hypothesis. Confirmation bias can be induced by our own motives (e.g., values, fears, politics, economic incentives) and by the way in which a problem or a question is framed.

Confirmation bias in the laboratory

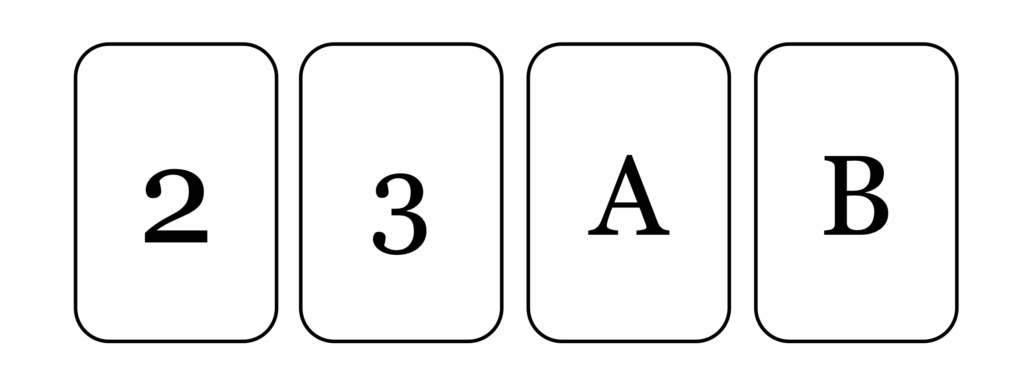

Empirical evidence for confirmation bias in information search dates back to Peter Wason’s research from the 1960s on the psychology of how people test rules. The most famous example is his Card Selection task (sometimes called the four-card task). Research participants are shown four cards and are asked which two cards they should turn over to test a rule. You can try it out right here.

Which two cards would you turn over to test the rule, “All cards with an even number on one side have a vowel on the other side”?

Most of us choose cards 2 and A, and both cards have the potential to confirm the hypothesis. If you turned over the 2 and found a vowel, that would confirm your hypothesis. If you turned over the A and found an even number, that would confirm your hypothesis. The A card cannot disconfirm the rule, however, because the rule says nothing about what must be on the other side of a vowel. To test whether this set of cards disconfirms the rule, we would need to turn over the 2 and B cards. Only if an even number is paired with a consonant is the rule violated. If you turned over the 2 and found a Z, for instance, or turned over the B and found a 4, then you would know the rule was wrong.
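The logic of the card task can be checked mechanically. The sketch below is only an illustration, not part of Wason's materials: it assumes every card has a single digit on one side and a letter on the other, and it assumes the fourth visible card is an odd number (shown here as 7, a hypothetical stand-in, since the text names only the 2, A and B cards). The function names are invented for this example. A card is worth turning over only if some possible hidden face could violate the rule.

```python
import string

VOWELS = set("AEIOU")
LETTERS = list(string.ascii_uppercase)
DIGITS = [str(n) for n in range(10)]


def violates_rule(number: str, letter: str) -> bool:
    """The rule is broken only by an even number paired with a consonant."""
    return int(number) % 2 == 0 and letter not in VOWELS


def can_disconfirm(visible: str) -> bool:
    """True if some hidden face could make this card violate the rule."""
    if visible.isdigit():
        # Visible number: the hidden face is assumed to be a letter.
        return any(violates_rule(visible, letter) for letter in LETTERS)
    # Visible letter: the hidden face is assumed to be a digit.
    return any(violates_rule(number, visible) for number in DIGITS)


# "7" is an assumed fourth card; the others are named in the text.
cards = ["2", "7", "A", "B"]
informative = [card for card in cards if can_disconfirm(card)]
print(informative)  # ['2', 'B']
```

Under these assumptions, the A card never appears in the result: whatever number is behind it, the rule cannot be broken, which is why choosing it reflects a search for confirmation rather than a test.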

Research shows that another way we exhibit confirmation bias is in the kinds of questions we choose to ask. Many questions can only provide evidence that supports the hypothesis they test (e.g., “What makes you the right person for this job?”). We tend to be unbalanced in the number of questions we ask that would confirm or disconfirm our hypotheses.

In a classic 1978 paper, psychologists Mark Snyder and William Swann asked 58 undergraduate women at the University of Minnesota to test whether another participant fit a personality profile. Half tested whether their partner was an extravert. The other half tested whether their partner was an introvert.

To perform this test, each participant was shown a list of 26 questions and told to pick the 12 questions that would help them best test the hypothesis about their partner’s personality. The full list of questions fell into three categories. Eleven questions were the kind people usually ask of people they know are extraverts (“What kind of situations do you seek out if you want to meet new people?”). Ten questions were the kind people usually ask of people they know are introverts (“In what situations do you wish you could be more outgoing?”). The last five questions were irrelevant to extraversion and introversion (e.g., “What kind of charities do you like to contribute to?”).

Participants testing for evidence of extraversion were 52% more likely than participants testing for evidence of introversion to ask questions that would confirm extraversion. By contrast, participants testing for evidence of introversion were 115% more likely than participants testing for evidence of extraversion to ask questions that would confirm introversion.

Evidence that confirmation bias influences how we interpret and weigh information comes from research on framing effects in decision making. Framing effects occur when the way you consider a decision (e.g., by choosing versus rejecting one of two options) changes which option you choose. Imagine you are a judge deciding a child custody case between two parents getting a divorce, Parents A and B. Parent A gets along well with the child and is average in terms of income, health, working hours, and other characteristics typical of adults in the United States, whereas the characteristics of Parent B are more extreme. Parent B earns and travels for work more, has an active social life, minor health problems, and is very close with the child. How you decide between these two parents might depend on the frame through which you ask the question.

| Parent | Characteristics | Award | Deny |
| --- | --- | --- | --- |
| Parent A | Average income; average health; average working hours; reasonable rapport with the child; relatively stable social life | 36% | 45% |
| Parent B | Above-average income; very close relationship with the child; extremely active social life; lots of work-related travel; minor health problems | 64% | 55% |

In 1993, psychologist Eldar Shafir showed 170 Princeton University undergraduates the information above and asked them to make a decision. He asked half to whom they would award custody. A majority (64%) awarded custody to Parent B. He asked the other half of participants to whom they would deny custody. A majority (55%) also denied custody to Parent B! How did the frame lead more participants to both award and deny custody to Parent B? The way the question was framed (award versus deny) led participants to change the way they interpreted and weighed the evidence. Participants who chose a parent in the “award frame” focused on positive features of the parents, which favored Parent B. Participants who rejected a parent in the “deny frame” focused on negative features of the parents, which favored Parent A.
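A quick arithmetic check shows why this pattern is a framing effect rather than a simple preference. If awarding and denying were two sides of the same choice, the share awarding custody to a parent plus the share denying custody to that same parent would sum to 100%. The sketch below uses only the percentages reported above; the function name `frame_excess` is invented for this illustration.

```python
# Percentages reported in the text for each framing of the custody question.
award = {"Parent A": 36, "Parent B": 64}
deny = {"Parent A": 45, "Parent B": 55}


def frame_excess(parent: str) -> int:
    """Award share plus deny share, minus the 100 expected if the two
    frames were complementary ways of asking the same question."""
    return award[parent] + deny[parent] - 100


for parent in award:
    print(parent, frame_excess(parent))
# Parent A -19
# Parent B 19
```

Parent B's shares add up to 119%, 19 points above what complementary frames would allow, while Parent A's add up to only 81%. The parent with more extreme features attracts more decisions under both frames.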

Confirmation bias in the newsroom

Investigative journalism can be influenced by confirmation bias at many stages. Confirmation bias can influence which stories editors and journalists decide are likely to be worth reporting. It can influence which journalists are assigned to stories (those who share the cognitive biases of their editor and, therefore, also believe the stories to be important and newsworthy). Most important, it can influence how they collect evidence and transform that evidence into information for the public. Confirmation bias can influence what data is gathered and featured, which sources are interviewed and deemed credible, how evidence and quotes are interpreted and analyzed, which aspects of the story are featured prominently, which are downplayed and which are removed.

Whether a news organization decided to report on the SARS-CoV-2 lab leak hypothesis, for instance, was a decision made by the editors who greenlit the story and the journalist(s) who reported it. Journalists made decisions about what evidence they deemed credible and worth reporting to the public, which sources to interview, trust and quote, and how to contrast evidence for a lab leak against evidence for zoonotic or other origins (if those alternatives were present at all).

Tips to reduce confirmation bias

Confirmation bias can be reduced with interventions that range from simple decision strategies to more intensive training. A simple strategy you can apply immediately is to “consider the opposite”: when testing a hypothesis, also test whether its alternatives or its negative are true. Our justice system assumes that a person is innocent until proven guilty, but many jurors, investigators, judges and members of the public do not. Most presume guilt. Asking ourselves to explicitly consider whether an accused person is innocent can increase our propensity to consider evidence that challenges the criminal case against them.

When reporting on a story, remember that people’s default is to adopt a positive test strategy. Remember to examine the neglected diagonal — evidence disconfirming the main hypothesis and confirming its alternatives.
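One way to make this habit concrete is to sort reporting notes into the four cells of the evidence table (a to d) and check whether the neglected diagonal, cells b and c, is still empty. The sketch below is a hypothetical note-keeping aid, not a validated debiasing tool; the function name and the dictionary layout are assumptions made for illustration, and the sample entries come from the lab leak example above.

```python
from typing import Dict, List

# Cell labels follow the evidence table:
# a = confirms the focal hypothesis, b = disconfirms the focal hypothesis,
# c = confirms the alternative,      d = disconfirms the alternative.
POSITIVE_TEST_CELLS = ("a", "d")   # what a positive test strategy seeks out
NEGLECTED_DIAGONAL = ("b", "c")    # what it tends to overlook


def neglected_gaps(grid: Dict[str, List[str]]) -> List[str]:
    """Return the neglected-diagonal cells that hold no evidence yet."""
    return [cell for cell in NEGLECTED_DIAGONAL if not grid.get(cell)]


notes = {
    "a": ["Proximity of the Wuhan Institute of Virology to the Huanan Market"],
    "b": [],
    "c": [],
    "d": ["Earliest case reported 30 km from the Huanan Market"],
}
print(neglected_gaps(notes))  # ['b', 'c']

# Adding disconfirming evidence for the focal hypothesis closes one gap.
notes["b"].append("First cases not linked to workers at the institute")
print(neglected_gaps(notes))  # ['c']
```

An empty list means each neglected cell has at least one entry. It says nothing about how strong that evidence is, so it is a prompt to look, not a measure of balance.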

New research by my collaborators and me finds that even one-shot debiasing training interventions can help people recognize confirmation bias and reduce its influence on their own judgments and decisions, in the short and long term.

In a 2015 study, we brought 278 Pittsburghers into the laboratory. Each participant completed a pretest consisting of three tests of their susceptibility to confirmation bias and two other biases (i.e., bias blind spot and correspondence bias). One-third of the participants then watched a 30-minute training video developed by IARPA (the Intelligence Advanced Research Projects Activity). In the video, a narrator defines biases, actors demonstrate in skits how a bias might influence a judgment or decision, and then strategies are reviewed to reduce the bias.

The other two-thirds of participants played a 90-minute “serious” detective game. The game elicited each of the three biases during game play. At the end of each level, experts described the three biases and gave examples of how they influence professional judgments and decisions. Participants then received personalized feedback on the biases they exhibited during game play and strategies to mitigate the biases. They also practiced implementing those strategies.

After completing one of these two interventions, all participants completed a post-test that included scales measuring how the interventions influenced their susceptibility to each of the three biases. Two months later, participants completed a third round of bias scales online, which tested whether the intervention produced a lasting change.

This project was a long shot. Most decision scientists think that cognitive biases are like visual illusions: we can learn that they exist, but we can’t do much to prevent or reduce them. What we found was striking. Whether they watched the video or played the game, participants exhibited large reductions in their susceptibility to all three cognitive biases, both immediately and two months later.

In a 2019 paper with Anne-Laure Sellier and Irene Scopelliti (the Cartier-chaired professor of creativity and marketing at HEC Paris and a professor of marketing and behavioral science in the Bayes Business School at City, University of London, respectively), I found that debiasing training can improve decision making outside the laboratory, when people are not reminded of cognitive biases and do not know that their decisions are being observed. We conducted a naturalistic experiment in which 318 students enrolled in master’s degree programs at HEC Paris played our serious game once across a 20-day period.

We surreptitiously measured the extent to which the game influenced their susceptibility to confirmation bias by inserting into their courses a business case based on a real-world event. Students did not know that the course and game were connected.

Business cases are essentially a role-playing game or simulation of a problem that business leaders might face. Students are presented with the problem and evidence (e.g., data, opinions of different employees and managers). They then conduct an analysis and decide their best course of action under those circumstances.

We found that students who played the debiasing training game before doing the case were 19% less likely to make an inferior hypothesis-confirming decision in the case than participants who played the debiasing training game after doing the case.

These experiments give us hope that debiasing training can work. There is still much exciting work to be done to see when and how debiasing training interventions reduce cognitive biases and which features of these and other interventions are most effective.

Cognitive biases like confirmation bias can help us save time and energy when our initial hypotheses are correct, but they can also create catastrophic mistakes. Learning to understand, spot and correct them — especially when the stakes are high — is a valuable skill for all journalists.
