Journalists often make mistakes when reporting on data such as opinion poll results, federal jobs reports and census surveys because they don’t quite understand — or they ignore — the data’s margin of error.

Data collected from a sample of the population will never perfectly represent the population as a whole. The margin of error, which depends primarily on sample size, is a measure of how precise the estimate is. The margin of error for an opinion poll indicates how close the match is likely to be between the responses of the people in the poll and those of the population as a whole.

To help journalists understand margin of error and how to correctly interpret data from polls and surveys, we’ve put together a list of seven tips, including clarifying examples.

- Look for the margin of error — and report it. It tells you and your audience how much the results can vary.

Reputable researchers always report margins of error along with their results. This information is important for your audience to know.

Let’s say that 44 percent of the 1,200 U.S. adults who responded to a poll about marijuana legalization said they support legalization. Let’s also say the margin of error for the results is +/- 3 percentage points. The margin of error tells us there’s a high probability that nationwide support for marijuana legalization falls between 41 percent and 47 percent.
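For readers who like to check the arithmetic, here is a minimal sketch of that calculation. The function name `plausible_range` is our own illustration, not part of any polling tool.

```python
def plausible_range(estimate, margin):
    """Return the (low, high) range implied by an estimate and its margin of error."""
    return estimate - margin, estimate + margin


# Figures from the marijuana legalization example: 44 percent, +/- 3 points.
low, high = plausible_range(44, 3)
print(f"Support likely falls between {low} and {high} percent")
```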

- Remember that the larger the margin of error, the greater the likelihood the survey estimate will be inaccurate.

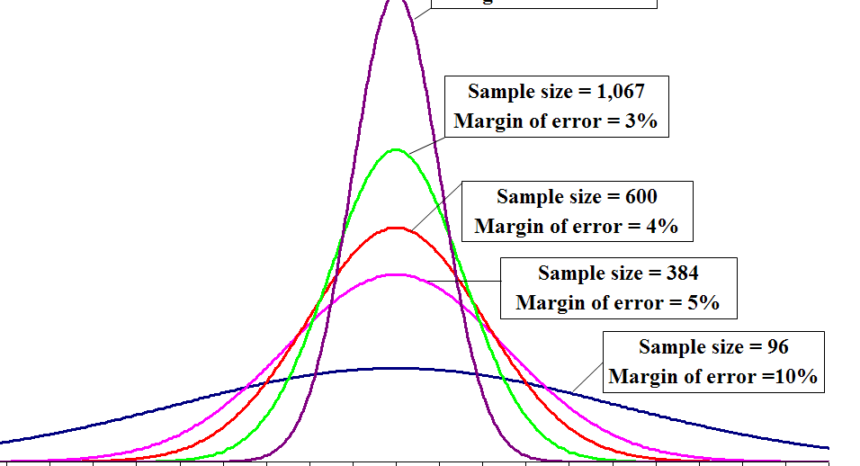

Assuming that a survey was otherwise conducted properly, the larger the size of a sample, the more accurate the poll estimates are likely to be. As the sample size grows, the margin of error shrinks. Conversely, smaller samples have larger margins of error.

The margin of error for a reliable sample of 200 people is +/- 7.1 percentage points. For a sample of 4,000 people, it’s +/- 1.6 percentage points. Many polls rely on samples of around 1,200 to 1,500 people, which have margins of error of approximately +/- 3 percentage points.
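The sketch below shows one way to see how sample size drives the margin of error. It rests on assumptions the examples above do not spell out: a simple random sample, a 95 percent confidence level (z = 1.96) and a worst-case 50/50 split in responses. The figures quoted above are consistent with a common rule of thumb, 1 divided by the square root of the sample size, so that is included too. Both function names are illustrative.

```python
import math


def margin_of_error(n, p=0.5, z=1.96):
    """Approximate margin of error, in percentage points, for a simple random sample.

    Assumes a 95 percent confidence level (z = 1.96) and, by default,
    the worst-case 50/50 split (p = 0.5).
    """
    return 100 * z * math.sqrt(p * (1 - p) / n)


def rule_of_thumb(n):
    """Quick approximation: 1 / sqrt(n), expressed in percentage points."""
    return 100 / math.sqrt(n)


for n in (200, 1200, 1500, 4000):
    print(f"n={n:>5}: formula +/- {margin_of_error(n):.1f}, "
          f"rule of thumb +/- {rule_of_thumb(n):.1f}")
```

The two methods differ by a tenth or two of a percentage point, which is why published margins for the same sample size can vary slightly.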

- Make sure a political candidate really has the lead before you report it.

If a national public opinion poll shows that political Candidate A is 2 percentage points ahead of Candidate B but the margin of error is +/- 3 percentage points, journalists should report that it’s too close to tell at this point who’s in the lead.

Journalists often feel pressure to give their audience a clear-cut statement about which candidate is ahead. But in this example, the poll result is not clear cut and the journalist should say so. There’s as much news in that claim as there is in the misleading claim that one candidate is winning.
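As a rough sketch, the check described above can be written in a few lines. The function `describe_lead` and its wording are hypothetical. It applies only the simple comparison used in this tip: the lead against the poll's reported margin of error. The uncertainty on the gap between two candidates is generally larger than the reported margin, so treat this check as a minimum bar.

```python
def describe_lead(lead_points, margin):
    """Compare a candidate's lead with the poll's reported margin of error."""
    if abs(lead_points) <= margin:
        return "too close to call"
    return "lead exceeds the margin of error"


# Candidate A leads by 2 points; the margin of error is +/- 3 points.
print(describe_lead(2, 3))
```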

- Note that there are real trends, and then there are mistaken claims of a trend.

If the results of polls taken over a period are exceedingly close, there is no trend even though the numbers will vary slightly. To take a hypothetical example, imagine that pollsters ask a sample of Florida residents whether they would support a new state sales tax. In January, 31 percent said yes. In July, 33 percent said yes. Imagine now that each poll had a margin of error of +/- 2 percentage points.

If you’re a reporter covering this issue, you want to be able to tell audiences whether public support for this new tax is changing. But in this case, due to the margin of error, you cannot infer a trend. What you can say is that support for a new sales tax is holding steady at about a third of Florida residents.
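One simple way to test a claimed trend, sketched here as an assumption rather than a formal statistical test, is to see whether the two polls' ranges overlap. The function name `ranges_overlap` is our own.

```python
def ranges_overlap(est_a, est_b, margin_a, margin_b):
    """Return True if the two estimates' margin-of-error ranges overlap."""
    low_a, high_a = est_a - margin_a, est_a + margin_a
    low_b, high_b = est_b - margin_b, est_b + margin_b
    return low_a <= high_b and low_b <= high_a


# January: 31 percent, July: 33 percent, each with a +/- 2 point margin.
if ranges_overlap(31, 33, 2, 2):
    print("Ranges overlap: the polls do not show a clear trend")
else:
    print("Ranges do not overlap: the change may be real")
```

In the Florida example, the January range (29 to 33 percent) and the July range (31 to 35 percent) overlap, so the sketch reports no clear trend.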

- Watch your adjectives. (And it might be best to avoid them altogether.)

When reporting on data that has a margin of error, use care when choosing adjectives. Jonathan Stray, a journalist and computer scientist who’s a research scholar at Columbia Journalism School, highlights some of the errors journalists make when covering federal jobs reports in a piece he wrote for DataDrivenJournalism.net.

Stray explains: “The September 2015 jobs number was 142,000, which news organizations labelled ‘disappointing’ to ‘grim.’ The October jobs number was 271,000, which was reported as ‘strong’ to ‘stellar.’”

Neither characterization makes sense considering those monthly jobs growth numbers, released by the U.S. Bureau of Labor Statistics, had a margin of error of +/- 105,000. (It’s a reason why, when the agency later releases adjusted figures based on additional evidence, the jobs number for a month is often substantially different from what was originally reported.)

Journalists should help their audiences understand how much uncertainty is in the data they use in their reporting — especially if the data is the focus of the story. Stray writes: “This is one example of a technical issue that becomes an ethics issue: ignoring the uncertainty … If we are going to use data to generate headlines, we need to get data interpretation right.”
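To see why those adjectives were hard to justify, it helps to write out the range each jobs number implies. This is a minimal sketch using only the figures quoted above. The names `MARGIN`, `reports` and `job_range` are illustrative.

```python
MARGIN = 105_000  # margin of error cited above for the monthly jobs number

# Jobs numbers quoted from Stray's piece.
reports = {"September 2015": 142_000, "October 2015": 271_000}


def job_range(estimate, margin=MARGIN):
    """Return the (low, high) range implied by a jobs estimate and its margin."""
    return estimate - margin, estimate + margin


for month, jobs in reports.items():
    low, high = job_range(jobs)
    print(f"{month}: {low:,} to {high:,} jobs added")
```

The September range runs from 37,000 to 247,000 and the October range from 166,000 to 376,000. The two ranges overlap, so the numbers alone do not establish that one month was "grim" and the next "stellar."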

- Keep in mind that the margin of error for subgroups of a sample will always be larger than the margin of error for the sample.

As we mentioned above, the margin of error is based largely on sample size. If a researcher surveys 1,000 residents of Los Angeles County, California, to find out how many adults there have completed college, the margin of error is going to be slightly more than +/- 3 percentage points.

But what if researchers want to look at the college completion rate for various demographic groups, such as black people, women or registered Republicans? In these cases, the margin of error depends on the size of the group. For example, if 200 of those sampled are from a particular demographic group, the margin of error for that group will be roughly +/- 7 percentage points. Again, the margin of error in a sample depends largely on the number of respondents. The smaller the number, the larger the margin of error. That’s true whether you’re talking about the entire sample or a subset of it.
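The comparison between the full sample and the subgroup can be sketched as follows. As before, this assumes a simple random sample, a 95 percent confidence level and a worst-case 50/50 split. The function name is illustrative.

```python
import math


def margin_of_error(n, p=0.5, z=1.96):
    """Approximate margin of error in percentage points (95 percent confidence)."""
    return 100 * z * math.sqrt(p * (1 - p) / n)


full_sample = margin_of_error(1000)  # all Los Angeles County respondents
subgroup = margin_of_error(200)      # one demographic group within the sample

print(f"Full sample of 1,000: +/- {full_sample:.1f} points")
print(f"Subgroup of 200:      +/- {subgroup:.1f} points")
print(f"The subgroup margin is {subgroup / full_sample:.1f} times larger")
```

Because the margin shrinks with the square root of the sample size, a subgroup one-fifth the size of the full sample has a margin a little more than twice as large, not five times as large.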

- Use caution when comparing results from different polls and surveys, especially those conducted by different organizations.

Although most polling firms have a similar methodology, polls can differ in their particulars. For example: Did a telephone poll include people with cell phones, or was it limited to those with a landline? Was the sample drawn from registered voters only, or was it based on all adults of voting age? Was the wording of the question the same, or was it substantially different? Such differences will affect the estimates derived from a poll, and journalists should be aware of them when comparing results from different polling organizations.

If you’re looking for more guidance on polls, check out these 11 questions journalists should ask about public opinion polls.

Journalist’s Resource would like to thank Todd Wallack, an investigative reporter and data journalist on the Boston Globe’s Spotlight team, for his help creating this tip sheet.

This visualization, created by Fadethree and obtained from Wikimedia Commons, is public domain content.
